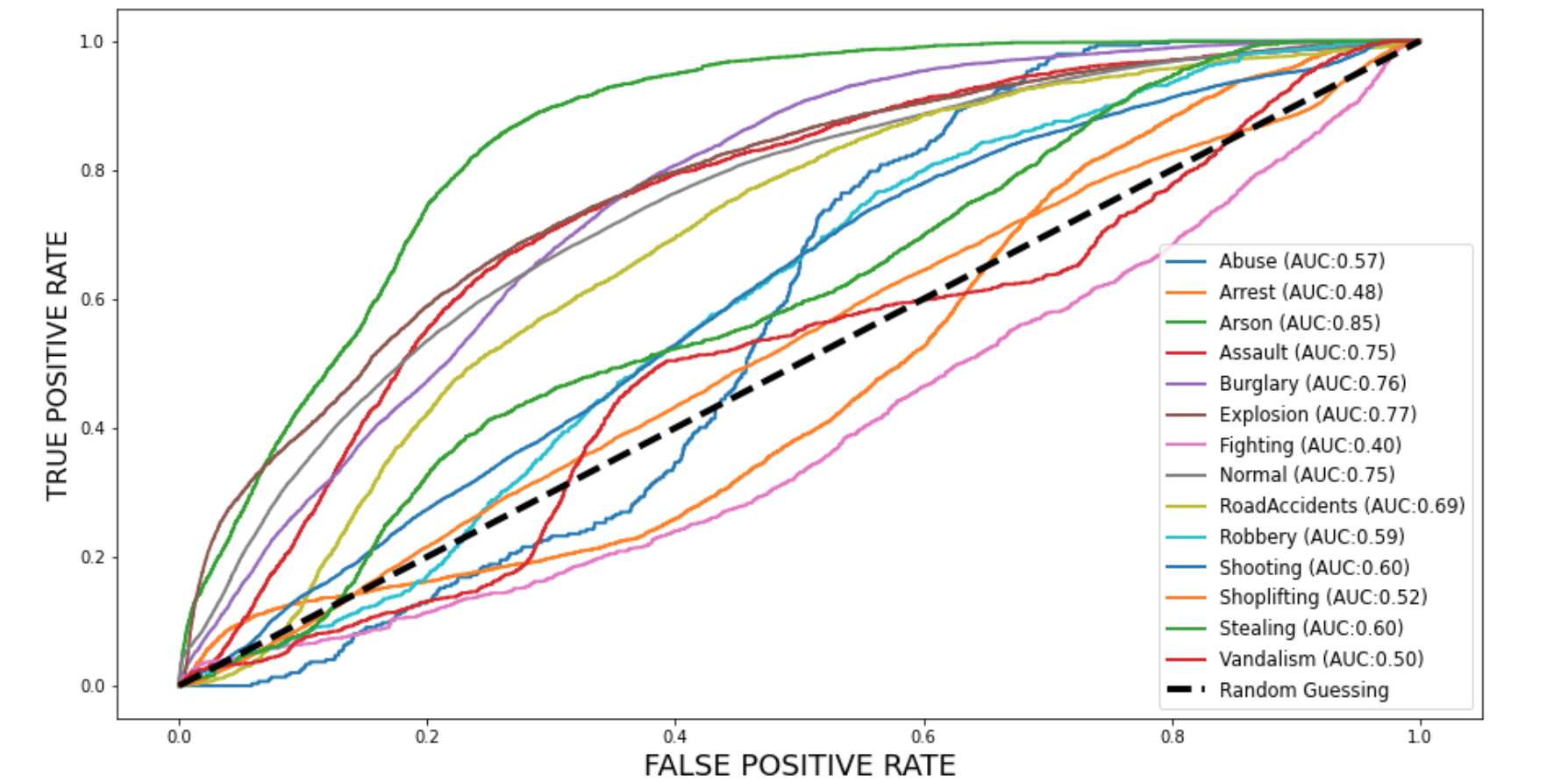

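    import matplotlib.pyplot as plt
    from sklearn.metrics import auc, roc_auc_score, roc_curve
    from sklearn.preprocessing import LabelBinarizer

    # Predicted class probabilities and integer ground-truth labels for the test set.
    # The two only line up if test_generator was created with shuffle=False.
    preds = model.predict(test_generator)
    y_test = test_generator.classes

    fig, c_ax = plt.subplots(1, 1, figsize=(15, 8))

    def multiclass_roc_auc_score(y_test, y_pred, average="macro"):
        # One-hot encode the labels so each class gets its own one-vs-rest ROC curve
        lb = LabelBinarizer()
        lb.fit(y_test)
        y_test = lb.transform(y_test)

        # Draws on the module-level axis c_ax; CLASS_LABELS must follow the model's output column order
        for (idx, c_label) in enumerate(CLASS_LABELS):
            fpr, tpr, thresholds = roc_curve(y_test[:, idx].astype(int), y_pred[:, idx])
            c_ax.plot(fpr, tpr, lw=2, label='%s (AUC:%0.2f)' % (c_label, auc(fpr, tpr)))

        # Diagonal reference line: the ROC curve of a random classifier
        c_ax.plot(fpr, fpr, 'black', linestyle='dashed', lw=4, label='Random Guessing')
        return roc_auc_score(y_test, y_pred, average=average)

    print('ROC AUC score:', multiclass_roc_auc_score(y_test, preds, average="micro"))

    plt.xlabel('FALSE POSITIVE RATE', fontsize=16)
    plt.ylabel('TRUE POSITIVE RATE', fontsize=16)
    plt.legend(fontsize=11.5)
    plt.show()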
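The function above defaults to `average="macro"` but is called with `average="micro"`, and the two can give different numbers. The following dependency-free sketch may help show why. It is an illustration only, not the notebook's code: the helper names (`binary_auc`, `one_hot`, `multiclass_auc`) and the toy labels and scores are hypothetical. It computes AUC as the fraction of positive/negative pairs ranked correctly, with ties counting one half, which is equivalent to the area under the ROC curve.

```python
def binary_auc(labels, scores):
    """AUC as the chance that a random positive outranks a random negative."""
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    # A correctly ordered pair counts 1, a tie counts 0.5, a misordered pair 0
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def one_hot(y, n_classes):
    """Turn integer class ids into rows of 0/1 indicators."""
    return [[1 if c == k else 0 for k in range(n_classes)] for c in y]


def multiclass_auc(y_true, y_score, n_classes, average="macro"):
    onehot = one_hot(y_true, n_classes)
    if average == "micro":
        # Micro: pool every (sample, class) cell into one binary problem
        flat_labels = [v for row in onehot for v in row]
        flat_scores = [v for row in y_score for v in row]
        return binary_auc(flat_labels, flat_scores)
    # Macro: one-vs-rest AUC per class, then an unweighted mean
    per_class = [
        binary_auc([row[k] for row in onehot], [row[k] for row in y_score])
        for k in range(n_classes)
    ]
    return sum(per_class) / n_classes


# Hypothetical toy data: 4 samples, 3 classes, rows are predicted probabilities
y_true = [0, 1, 2, 1]
y_score = [
    [0.7, 0.2, 0.1],
    [0.3, 0.5, 0.2],
    [0.2, 0.3, 0.5],
    [0.5, 0.4, 0.1],  # true class 1, but class 0 receives the highest score
]

macro = multiclass_auc(y_true, y_score, 3, average="macro")
micro = multiclass_auc(y_true, y_score, 3, average="micro")
print("macro:", macro)  # 1.0
print("micro:", micro)  # 0.9375
```

Within each class column the positives still outrank the negatives, so every per-class curve is perfect and the macro average is 1.0. Pooling all cells exposes the last sample's overconfident class-0 score, which pulls the micro average down to 0.9375.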