1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

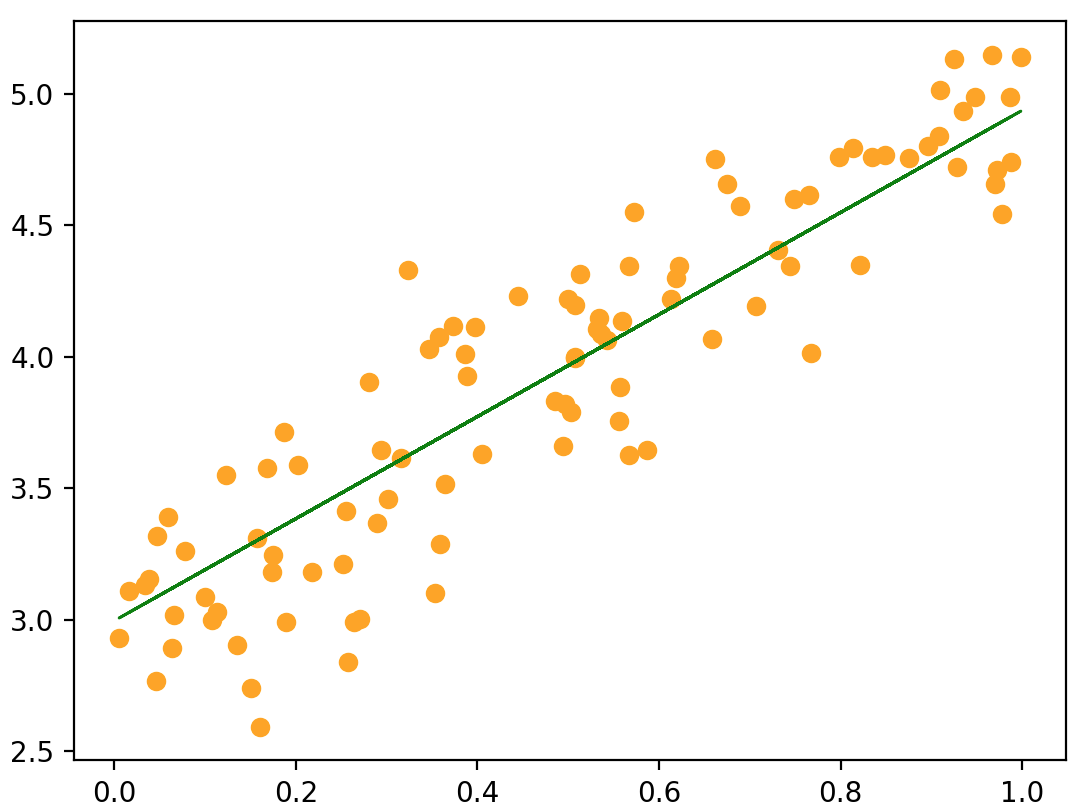

| import torch

import torch.nn as nn

import torch.optim as optim

import matplotlib.pyplot as plt

import numpy as np

x_train =np.random.rand(100, 1)

y_train = 2 * x_train + 3 + np.random.randn(100, 1) * 0.3

x_test = np.random.rand(20, 1)

y_test = 2 * x_test + 3 + np.random.randn(20, 1) * 0.3

class LinearRegression(nn.Module):

def __init__(self, input_dim, output_dim):

super(LinearRegression, self).__init__()

self.linear = nn.Linear(input_dim, output_dim)

def forward(self, x):

x = self.linear(x)

return x

model = LinearRegression(1, 1)

criterion = nn.MSELoss()

optimizer = optim.SGD(model.parameters(), lr=0.01)

for epoch in range(1000):

outputs = model(torch.from_numpy(x_train).float())

loss = criterion(outputs, torch.from_numpy(y_train).float())

optimizer.zero_grad()

loss.backward()

optimizer.step()

if epoch % 100 == 0:

print(f'Epoch [{epoch + 1}/ 1000], Loss: {loss.item()}')

plt.scatter(x_train.flatten(), y_train.flatten(), c='orange')

plt.plot(x_train.flatten(), model(

torch.from_numpy(x_train).float()).detach().numpy(), 'g-', lw=1)

plt.show()

|